For webmasters and SEO professionals, alerts from Google Search Console can sometimes be confusing. One such alert is:

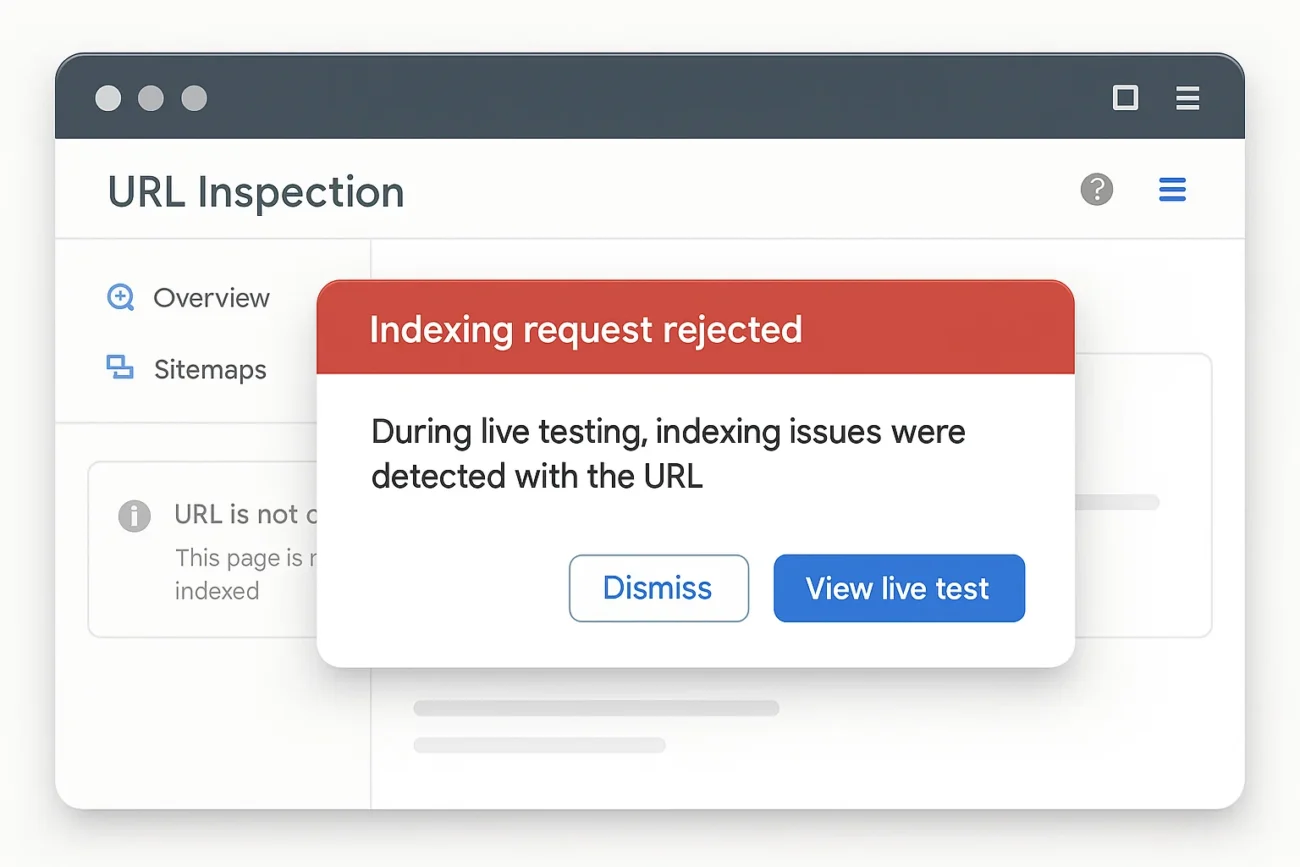

“Indexing request rejected: During live testing, indexing issues were detected with the URL.”

In this blog post, we will walk through what this error means, why it appears, and how you can resolve it step by step. I’ll share practical insights and personal experience so that you can quickly understand and fix the problem.

1. Understanding the Error and Context

1.1 What Does “Indexing Request Rejected” Mean?

In Google Search Console, the “Indexing Request Rejected” message appears when you manually click “Request Indexing” using the URL Inspection tool, but Google’s live test discovers issues preventing indexing.

- Live Testing: When you click “Test Live URL” in the URL Inspection tool, Googlebot attempts to fetch and render your page in real time to check for any blocking factors—such as server errors, robots.txt rules, or noindex tags.

- Indexing Issues Detected: If Googlebot encounters any obstacles during this live test that would block the page from being indexed, the “Indexing Request Rejected” alert is shown instead of allowing the URL to be added to Google’s index.

1.2 Why Is This Alert Important?

- Impact on Visibility: If important pages are not indexed, they won’t appear in search results, which affects organic traffic and rankings.

- Early Warning: Live testing pinpoints technical problems—server errors, crawl-blocking rules, or noindex directives—that prevent your page from entering Google’s index.

- Required Action: This alert tells you that you need to fix something before resubmitting the URL for indexing.

2. Common Causes of “Indexing Request Rejected”

Below is a table summarizing frequent causes for this error, along with brief descriptions and potential fixes.

| No. | Cause | Description | Possible Fix |
|---|---|---|---|
| 1 | robots.txt Blocking | If your robots.txt file contains a Disallow directive for that URL, Googlebot cannot crawl the page. | Edit robots.txt to remove the Disallow or add an Allow directive for that path. |
| 2 | Noindex Meta Tag or X-Robots-Tag Header | A <meta name="robots" content="noindex"> tag in the HTML or an X-Robots-Tag: noindex HTTP header explicitly tells Google not to index the page. | Remove the noindex directive or change it to index, follow. |
| 3 | Server Error (5xx) During Live Test | If the server returns a 5xx error (e.g., 500 Internal Server Error) or times out during the live test, Google rejects the indexing request. | Check server logs, investigate downtime or high latency, and work with your hosting provider. |
| 4 | Redirect Chains or Loops | If your URL goes through multiple redirects (301/302) or enters an infinite loop, Googlebot can’t properly access the content. | Simplify redirects so there’s only one 301 redirect directly to the final URL. |
| 5 | Canonicalization Issues | If your page’s canonical tag points to a wrong or inaccessible URL, Google may refuse to index it. | Update the <link rel="canonical" href="correct-URL"> to point to the correct, accessible URL. |
| 6 | Blocked Resources (JS/CSS) | If critical CSS or JavaScript files are blocked by robots.txt, Google cannot render the page during live testing, causing a rejection. | Allow those resources in robots.txt or adjust server settings so Googlebot can fetch them. |
| 7 | Mobile-First Indexing Errors | If the mobile version of the page is inaccessible (wrong dynamic serving or responsive design issue), Google’s mobile-first indexing fails. | Ensure the mobile version is accessible and properly responsive. Check the rendered screenshot in the URL Inspection tool’s live test, or run Lighthouse. |
| 8 | Sitemap Mismatch or Submission Error | If your sitemap is outdated or missing the URL altogether, Google may be slow to discover the latest content. This is unlikely to cause the rejection on its own, so treat it as a secondary check. | Update your sitemap.xml to include the URL and resubmit it in Google Search Console. |

3. Step-by-Step Troubleshooting

3.1 Perform a Live Test via URL Inspection

- Sign in to Google Search Console, then open the URL Inspection tool.

- Paste your problematic URL into the search bar and press Enter.

- Click the “Test Live URL” button—this lets Googlebot crawl and render your page right now.

- Outcome Examples:

- If you see a 5xx Server Error, note it.

- If you see “Blocked by robots.txt”, that’s your clue.

- If it says “Detected noindex directive”, check for a meta tag or HTTP header.

- Review the Live Test Details:

- Coverage: Any crawl errors (5xx, blocked resources, etc.).

- Crawl: Whether Googlebot fetched the page successfully.

- Indexing: If “URL cannot be indexed” appears, it usually lists a specific reason.

3.2 Check Your robots.txt File

- In your browser, go to https://yourdomain.com/robots.txt.

- Look for lines like:

User-agent: *

Disallow: /path-to-page/

- If your URL’s path appears under a Disallow directive, Googlebot is blocked from crawling it.
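
The same check can be scripted. Below is a minimal sketch using Python's standard `urllib.robotparser`; the helper name `is_blocked` and the sample rules are assumptions for illustration. One caveat: Python's parser applies the first matching rule in file order, while Google uses the most specific (longest) match, so results can differ when a file mixes Allow and Disallow rules for overlapping paths.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text disallows user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Sample rules copied from the example above (hypothetical path).
SAMPLE = """\
User-agent: *
Disallow: /path-to-page/
"""

print(is_blocked(SAMPLE, "https://yourdomain.com/path-to-page/"))
print(is_blocked(SAMPLE, "https://yourdomain.com/other-page/"))
```

To test a live site, paste the contents of your own robots.txt into `SAMPLE` and treat the result as a first hint, then confirm with the live test in Search Console.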

Fix: Remove or adjust the directive so Googlebot can access that path. For example:

User-agent: *

Allow: /path-to-page/

3.3 Inspect Meta Robots Tags and X-Robots-Tag Headers

- View your page’s source code (right-click > View Page Source).

- Search for:

<meta name="robots" content="noindex">

- Or check your HTTP response headers for:

X-Robots-Tag: noindex
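
If you have many pages to check, both places can be scanned with a short script. This is a rough sketch using only Python's standard library; the names `RobotsMetaParser` and `has_noindex` are made up for this example, and it assumes you already have the page HTML and the response headers in hand. It does not handle header values prefixed with a crawler name (such as `googlebot: noindex`).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the content of every <meta name="robots"> or <meta name="googlebot"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", ""))


def _blocks_indexing(value: str) -> bool:
    # "none" is shorthand for "noindex, nofollow".
    tokens = {token.strip() for token in value.lower().split(",")}
    return "noindex" in tokens or "none" in tokens


def has_noindex(html: str, headers: dict) -> bool:
    """Return True if the HTML or the X-Robots-Tag header asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_values = [v for k, v in headers.items() if k.lower() == "x-robots-tag"]
    return any(_blocks_indexing(v) for v in parser.directives + header_values)


print(has_noindex('<meta name="robots" content="noindex">', {}))
print(has_noindex('<meta name="robots" content="index, follow">', {}))
```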

Fix: If you find a noindex directive, remove it or change it to:

<meta name="robots" content="index, follow">

Or in your server configuration/headers:

X-Robots-Tag: index, follow

3.4 Investigate Server Errors (5xx)

- If the live test shows a 5xx error or a timeout, check your server logs:

- Access your hosting control panel (cPanel, Plesk) or SSH and review error logs.

- Look for spikes in CPU/memory usage or failing processes.

Common Causes of 5xx:

- Resource limitations (CPU/RAM).

- Plugin or module conflicts (especially in content management systems like WordPress).

- Database connection issues.

- Misconfigurations in .htaccess, nginx.conf, or similar.

Fix Steps:

- Resource Usage: If CPU or RAM is maxed out, optimize your code or upgrade hosting.

- Plugin/Module Conflicts: Temporarily disable newly added plugins/themes and test again.

- Database Health: Repair database tables, ensure the database is accessible without errors.

- Configuration Files: Check for syntax errors in .htaccess (Apache) or malformed directives in nginx.conf (Nginx).
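
When you review logs for 5xx responses, it helps to count them per URL so the worst pages stand out. Here is a small sketch that assumes your access log uses the common/combined log format (request line in quotes, followed by the status code); the function name `count_5xx` and the sample lines are illustrative, not taken from a real server.

```python
import re
from collections import Counter

# Matches the quoted request line and the status code that follows it,
# e.g. "GET /your-page HTTP/1.1" 500
LOG_LINE = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def count_5xx(lines):
    """Return a Counter of request paths that produced a 5xx status."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and match.group("status").startswith("5"):
            errors[match.group("path")] += 1
    return errors


# Hypothetical sample lines in combined log format.
SAMPLE_LOG = [
    '66.249.66.1 - - [02/Jun/2025:09:30:00 +0000] "GET /your-page HTTP/1.1" 500 1234 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [02/Jun/2025:09:31:00 +0000] "GET /your-page HTTP/1.1" 503 0 "-" "Googlebot/2.1"',
    '203.0.113.5 - - [02/Jun/2025:09:32:00 +0000] "GET /about HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]

for path, hits in count_5xx(SAMPLE_LOG).most_common():
    print(path, hits)
```

In practice you would pass the lines of your real access log instead of `SAMPLE_LOG`; the file location depends on your host and server setup.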

3.5 Examine Redirect Chains and Canonical Tags

- Use a command-line tool or online redirect checker to test your URL. Adding -L makes curl follow redirects, so every hop is printed:

curl -IL https://yourdomain.com/your-page

- Note any 3xx redirect codes. Ideally, you want only one 301 redirect pointing directly to the final URL.

Fix:

- If the URL goes through multiple steps (A → B → C), update your server so A directly 301-redirects to C.

- Check the <link rel="canonical"> tag. If it points to the wrong URL (e.g., another domain), fix it:

<link rel="canonical" href="https://yourdomain.com/your-page">
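
To make the chain-versus-loop distinction concrete, here is a small sketch that walks a set of recorded responses. It works on a plain dictionary rather than making network requests, so you would fill `responses` from your own curl output; the function name `trace_redirects` and the sample URLs are assumptions for illustration.

```python
REDIRECT_CODES = (301, 302, 307, 308)


def trace_redirects(start, responses, max_hops=10):
    """Follow redirects through `responses`, a dict of url -> (status, location).

    Returns (chain, problem), where problem is None, "loop", or "too many hops".
    URLs missing from `responses` are treated as final pages.
    """
    chain = [start]
    seen = {start}
    url = start
    while True:
        status, location = responses.get(url, (200, None))
        if status not in REDIRECT_CODES or not location:
            return chain, None
        if location in seen:
            return chain + [location], "loop"
        if len(chain) > max_hops:
            return chain, "too many hops"
        chain.append(location)
        seen.add(location)
        url = location


# Hypothetical A -> B -> C chain.
recorded = {"/a": (301, "/b"), "/b": (302, "/c"), "/c": (200, None)}
chain, problem = trace_redirects("/a", recorded)
print(" -> ".join(chain), "| problem:", problem)
```

A chain longer than two entries means there is more than one hop, which is the case the fix above asks you to collapse.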

4. Common Fixes and Ongoing Best Practices

Here are some solutions and general guidelines to simplify your workflow and prevent similar indexing issues in the future.

4.1 robots.txt and Meta Tag Best Practices

- Allow Critical Resources

User-agent: *

Allow: /wp-content/uploads/

Disallow: /wp-admin/

Sitemap: https://yourdomain.com/sitemap.xml

Allowing your CSS, JavaScript, and images to be crawled ensures Google can render the page correctly during live testing.

- Default Meta Robots

On pages you want indexed, ensure your <head> includes:

<meta name="robots" content="index, follow">

This tells search engines to crawl and include the page in their index.

4.2 URL Structure and Redirect Guidelines

- Use Clean, Readable URLs:

https://yourdomain.com/blog/indexing-errors-solutions

- Avoid Extraneous Query Parameters unless required for functionality.

- Implement Single-Step 301 Redirects:

If you rename or move a page, make sure there’s only one 301 redirect from the old URL to the new URL.
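
If you keep your redirects in a simple old-to-new mapping, collapsing multi-step chains into single hops can be automated. The sketch below is one possible approach, not a drop-in for any particular server or plugin; `flatten_redirects` and the sample paths are hypothetical.

```python
def flatten_redirects(redirects):
    """Map every old URL straight to its final destination.

    `redirects` is a dict of old_url -> new_url. Raises ValueError on a loop.
    """
    flat = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Keep following until the target is no longer redirected itself.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat


# Hypothetical rules: /old was moved twice.
rules = {"/old": "/interim", "/interim": "/new"}
print(flatten_redirects(rules))
```

The flattened mapping can then be written back into your server configuration as one 301 rule per old URL.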

4.3 Optimize Server Performance and Uptime

- Leverage a CDN (e.g., Cloudflare or AWS CloudFront) for static assets to reduce server load and improve response times.

- Implement Page Caching using plugins (e.g., WP Super Cache or W3 Total Cache) or server-level caching (Redis, Varnish).

- Monitor Uptime with tools like UptimeRobot or Pingdom to get instant alerts if your site goes down.

4.4 Ensure Mobile Friendliness and Core Web Vitals

- Responsive Design: Test your pages on real devices or with Lighthouse in Chrome DevTools. Google retired its standalone Mobile-Friendly Test in December 2023.

- Page Speed Optimizations:

- Compress images (WebP format).

- Minify CSS and JavaScript.

- Implement lazy loading for below-the-fold images.

- Optimize Core Web Vitals (Largest Contentful Paint, Interaction to Next Paint, Cumulative Layout Shift) to improve user experience. Interaction to Next Paint replaced First Input Delay as a Core Web Vital in March 2024.
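
As a quick reference for reading your measurements, this sketch rates a value against Google's published Core Web Vitals thresholds (LCP in seconds, INP in milliseconds, CLS unitless; INP replaced First Input Delay in March 2024). The function name `rate` and the sample numbers are illustrative only.

```python
# (good up to, needs improvement up to); anything above the second value is poor.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def rate(metric, value):
    """Return 'good', 'needs improvement', or 'poor' for a measured value."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
for metric, value in {"LCP": 2.1, "INP": 350, "CLS": 0.3}.items():
    print(metric, value, rate(metric, value))
```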

5. Advanced Debugging Techniques

If you’ve followed the basic steps and still see the “Indexing Request Rejected” error, try these advanced methods:

5.1 Inspecting with curl and HTTP Response Headers

Run a curl command in your terminal to see exactly how the server is responding:

curl -I https://yourdomain.com/your-page --max-time 10

You should see something like:

HTTP/2 200

date: Mon, 02 Jun 2025 09:30:00 GMT

content-type: text/html; charset=UTF-8

x-robots-tag: index, follow

- HTTP/2 200: Confirms the server is returning a successful status code.

- x-robots-tag: index, follow: Ensures there’s no noindex directive.
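
If you save the curl output to a file or variable, the two checks above can be automated. This is a minimal sketch that parses a single response block (it does not handle the multiple blocks printed when curl follows redirects); the function names are invented for this example.

```python
def parse_head_response(raw: str):
    """Split the text printed by `curl -I` into (status_code, headers)."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    status_code = int(lines[0].split()[1])  # "HTTP/2 200" -> 200
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status_code, headers


def indexing_blockers(raw: str) -> list:
    """List the problems in one response that would stop the page being indexed."""
    status, headers = parse_head_response(raw)
    problems = []
    if status >= 500:
        problems.append(f"server error ({status})")
    elif status >= 400:
        problems.append(f"client error ({status})")
    elif status >= 300:
        problems.append(f"redirect ({status}) to {headers.get('location', 'unknown')}")
    if "noindex" in headers.get("x-robots-tag", "").lower():
        problems.append("X-Robots-Tag contains noindex")
    return problems


# The healthy response shown above.
HEALTHY = """\
HTTP/2 200
date: Mon, 02 Jun 2025 09:30:00 GMT
content-type: text/html; charset=UTF-8
x-robots-tag: index, follow
"""

print(indexing_blockers(HEALTHY))
```

An empty list means nothing in the headers is blocking indexing; the page body can still contain a noindex meta tag, so check that separately.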

5.2 Use Chrome DevTools to Simulate Crawling

- Open the page in Chrome and press F12 to launch DevTools.

- Go to the Network tab and reload the page (Ctrl+R).

- Look for any blocked resources (CSS, JS, fonts) or large resource sizes that might slow down rendering.

- Switch to the Console tab to see any JavaScript errors that could be preventing page rendering.

5.3 Google Search Console’s Page Indexing Report

- In Google Search Console, go to Indexing > Pages (this report was previously found under Index > Coverage).

- Look at the “Why pages aren’t indexed” table to filter by reasons such as “Discovered – currently not indexed,” “Crawled – currently not indexed,” or “Redirect error.”

- For each excluded URL, note the specific reason and address it following the earlier troubleshooting steps.

6. Prevention and Long-Term SEO Best Practices

Being proactive will reduce the chance of future indexing issues. Here are some ongoing best practices:

6.1 Maintain a High-Quality XML Sitemap

- Automatically Generate your sitemap:

- For WordPress: Use plugins like Yoast SEO, Rank Math, or All in One SEO.

- For non-WordPress sites: Use a sitemap generator tool or script.

- Include Only Canonical URLs in the sitemap—exclude duplicate or low-value pages.

- Update Dynamically: Ensure new pages are added automatically as they go live.

- Submit in Google Search Console: Under Sitemaps, enter https://yourdomain.com/sitemap.xml and click Submit.
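
For non-WordPress sites, a sitemap script can be quite short. The following is a minimal sketch using Python's standard `xml.etree.ElementTree` and the sitemaps.org namespace; `build_sitemap` and the sample page are assumptions, and a real script would pull the canonical URLs and dates from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from an iterable of (url, lastmod) pairs.

    lastmod is a 'YYYY-MM-DD' string. Only pass canonical URLs.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical page list.
print(build_sitemap([("https://yourdomain.com/your-page", "2025-06-02")]))
```

Running this whenever a page is published or updated keeps the file in step with the site, which is the "update dynamically" goal described above.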

6.2 Leverage Structured Data (Schema Markup)

- Implement JSON-LD for relevant schema types: articles, breadcrumbs, FAQs, product details, etc.

- Use the Rich Results Test to verify your markup.

- Benefits: Rich snippets can improve click-through rates, and structured data helps Google understand what the page is about. It does not guarantee indexing on its own.
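
As an illustration of what JSON-LD markup looks like, here is a small sketch that builds an Article snippet with Python's `json` module. The function name and all sample values are hypothetical, and the property set is a bare minimum; run the output through the Rich Results Test before relying on it.

```python
import json


def article_json_ld(headline, url, date_published, author_name):
    """Return a <script> tag containing schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": url,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
    }
    # Escape "</" so a value can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Hypothetical values for illustration.
print(article_json_ld(
    "Fixing Indexing Request Rejected",
    "https://yourdomain.com/blog/indexing-errors-solutions",
    "2025-06-02",
    "Jane Doe",
))
```

The resulting tag goes inside the page's head or body.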

6.3 Perform Regular Crawlability Audits

- Use tools like Screaming Frog or Ahrefs Site Audit to scan your site monthly.

- Check for:

- Broken links (4xx errors)

- Redirect chains or loops

- Duplicate meta descriptions or titles

- Missing alt attributes on images

- Address any issues promptly to keep Googlebot’s journey smooth.
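
One of the checks listed above, duplicate titles, is easy to run yourself on a crawl export. This sketch assumes you already have a mapping of URL to page title (for example, from a crawler's CSV export); `find_duplicate_titles` and the sample data are made up for the example.

```python
from collections import defaultdict


def find_duplicate_titles(pages):
    """Given a dict of url -> title, return normalized title -> sorted URLs sharing it."""
    by_title = defaultdict(list)
    for url, title in pages.items():
        by_title[title.strip().lower()].append(url)
    return {title: sorted(urls) for title, urls in by_title.items() if len(urls) > 1}


# Hypothetical crawl export.
crawl = {
    "https://yourdomain.com/a": "Indexing Errors Explained",
    "https://yourdomain.com/b": "indexing errors explained ",
    "https://yourdomain.com/c": "Contact Us",
}
print(find_duplicate_titles(crawl))
```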

6.4 Keep Content Fresh and Updated

- Review Older Pages: If a page’s information is outdated, update it with fresh content, or consolidate it into a more comprehensive post.

- Establish an Update Schedule: Decide to revisit important pages every few months.

- Manual Indexing Requests: For brand-new or significantly updated pages, run a live test and request indexing so Googlebot picks up changes faster.

7. Key Takeaways and Next Steps

- Understand the Error: “Indexing request rejected” means Google’s live test found a problem blocking indexing.

- Prioritize Live Testing: Always start with the “Test Live URL” feature in the URL Inspection tool to see real-time errors.

- Technical Checks to Run:

- robots.txt rules

- Meta robots tags and X-Robots-Tag headers

- Server status (5xx errors)

- Redirect chains and canonical tags

- Fix, Retest, and Request Indexing: After resolving the issue, run the live test again. Once it passes, click “Request Indexing.”

- Ongoing Prevention: Maintain a proper sitemap, use structured data, ensure mobile-friendliness, optimize page speed, and perform regular SEO audits.

Conclusion

This blog post provides a comprehensive walkthrough of the “Indexing request rejected: During live testing, indexing issues were detected with the URL” error—from understanding what triggers it to detailed troubleshooting steps, fixes, and preventive best practices. Each section is grounded in practical advice and firsthand experience so you can implement solutions confidently.

Remember These Core Steps:

- Run a live test with the URL Inspection tool to identify the blocking issue.

- Check and fix robots.txt rules, meta robots tags, server errors, redirects, and canonical tags.

- Re-run the live test, then click “Request Indexing” once the page can be crawled and rendered properly.

- Maintain healthy SEO practices—keep your sitemap up to date, use structured data, ensure mobile-friendliness, optimize page speed, and perform regular audits.

Frequently Asked Questions (FAQ)

Q1: Is it safe to ignore “Indexing Request Rejected,” or will Google automatically retry indexing?

A1: Clicking “Request Indexing” adds the URL to a priority crawl queue. If Google rejects the request, the page may still be picked up during routine crawling, but the same blocking issue will usually stop it from being indexed then too. The best practice is to fix the issue and resubmit manually so the page is indexed quickly.

Q2: What does “Crawled – currently not indexed” mean, and how is it different?

A2: “Crawled – currently not indexed” means Googlebot successfully fetched the page but chose not to index it—often due to low-quality or duplicate content. Improving content quality, adding internal links, and resubmitting can help.

Q3: Do indexing issues directly affect my SEO ranking?

A3: Yes. If an important page is not indexed, it won’t appear in search results, which means lost visibility and potential traffic. Ensuring all valuable pages are indexed is crucial for maximizing organic reach.

Q4: What if live testing shows “URL unavailable on request”?

A4: That typically indicates Googlebot couldn’t access the URL—possibly due to server firewall settings, DNS issues, or hosting restrictions. Work with your hosting provider or DevOps team to ensure Googlebot can reach your server without obstruction.

Q5: How do I handle mobile-first indexing problems?

A5: Google primarily evaluates your mobile version. If your mobile site serves errors or is inaccessible, fix any responsive design or dynamic serving issues. Use the URL Inspection tool’s live test or Lighthouse to verify the fix, since Google’s standalone Mobile-Friendly Test has been retired.